Websites are crucial, especially if you’re promoting something such as events, products, or an informational blog. The internet is so powerful that with a single click or share, your website can reach millions of users.

Moreover, you have a better chance of gaining viewers if your website makes it onto the first two pages of Google’s results.

If you’re promoting your blog about web design and viewers search Google for the “best web design blog,” they will get approximately 991,000,000 results.

Clearly, no one would navigate through hundreds of thousands of pages just to get what they need. These viewers will only check the first two pages of Google, where the most relevant sources are found.

Googlebot in a nutshell

Googlebot is Google’s search bot, which accesses your website to build an index. Some call it a “spider.” As long as these crawlers are allowed to visit a page, they will add it to the searchable index, which helps improve your website’s visibility for users’ search queries. Here are some things you should know about Googlebot:

- The crawlers visit higher-ranked websites more often, which increases their visibility.

- The crawlers will never stop accessing your website.

How vital is Googlebot for your website?

Why bother with Googlebot optimization if you have already perfected your search engine optimization? Googlebot is crucial for digital marketing because it determines whether your pages can be found at all, and being found is what brings in quality customers in the long run. If you want your business to appear for the most searched and popular topics, Googlebot optimization is your best friend.

Keep in mind that you should take care of Googlebot optimization before you proceed to SEO. Your quality content, professional-looking layout, and advertising campaigns will be rendered useless if no one can see them.

Ensuring that your website is accessible to crawlers and contains the most relevant pages will improve your brand campaign. After all, this is what digital marketing is all about. Googlebot optimization helps you reach high-quality customers who are looking for the products or services your company offers.

The Struggle of Website Publishers: How To Get To Page One

As a website publisher, your main challenge is getting your website from nothing to something. If you think you can personally email Google and ask for help putting your website on the first two pages of its search engine, it will certainly not work. I wish it were as easy as that, but there is a lot to work out before you can really establish your website at that rank.

Getting your website onto the first few pages of Google results may seem impossible, but believe me, it’s possible.

You just need to work more on your website content, because that is the part search engines can search most readily.

You get a greater chance if you keep your website updated, because that is what attracts Googlebot.

Did I just mention Googlebot? Yes, indeed! If you’re new to the term, here’s what you need to know about it and how Googlebot sees your website.

Knowing Googlebots Further: What They Are and How They Work

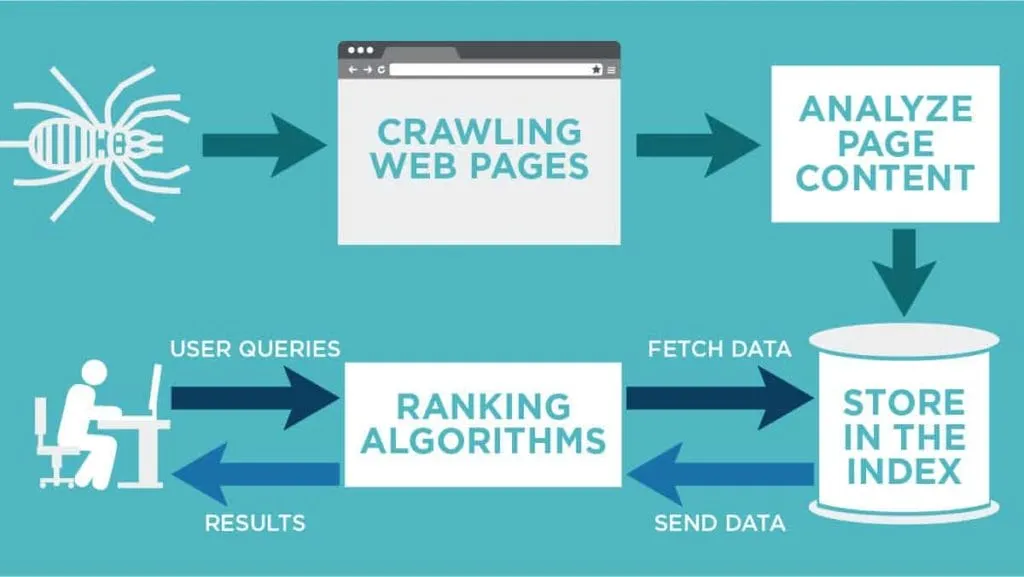

At first, I firmly believed Googlebots were Google employees who worked 24/7, searching for new and updated websites all over the internet. It took me by surprise when I found out that these bots are just a computer program built by Google to search huge numbers of websites conveniently and efficiently. These bots are also referred to as spiders or web crawlers.

These bots are designed to crawl whatever is on the web, and they crawl via links. A bot can find new content as well as updated content, and it is responsible for suggesting which discoveries should be included in the index.

These bots are vital because they feed the index, sometimes called Google’s brain.

Google uses a vast number of computers to send out its bots, and these bots use a database of previously discovered links and sitemaps to decide where to go next.

Whenever a new link is added to an existing site, the bots add it to the list of pages to visit next. Making sure all your links are reachable makes it easier for these bots to reach your website.
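To picture how crawling via links works, here is a minimal sketch in Python. It is a toy model only, not Google’s actual algorithm: the `LINKS` site map and the `crawl` function are made up for illustration. It keeps a queue of discovered pages and adds every newly found link to the list of pages to visit next.

```python
from collections import deque

# Hypothetical link graph: each page maps to the links found on it.
LINKS = {
    "/": ["/blog", "/about"],
    "/blog": ["/blog/post-1", "/"],
    "/about": [],
    "/blog/post-1": ["/blog"],
    "/orphan": ["/"],  # no page links here, so a link-following crawler never finds it
}


def crawl(start, links):
    """Visit pages breadth-first, queueing each newly discovered link once."""
    frontier = deque([start])  # pages waiting to be visited
    seen = {start}             # pages already discovered
    order = []                 # pages in the order they were visited
    while frontier:
        page = frontier.popleft()
        order.append(page)
        for target in links.get(page, []):
            if target not in seen:
                seen.add(target)
                frontier.append(target)
    return order


visited = crawl("/", LINKS)
print(visited)
print("unreachable:", sorted(set(LINKS) - set(visited)))
```

In this toy site, `/orphan` is never visited because no other page links to it, which is why reachable links matter so much.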

Here are a few more things you need to know about Googlebots:

- Googlebots crawl all websites continually, but they don’t crawl every page repeatedly. This is where consistent content marketing enters the picture. The more backlinks and social mentions your site earns, the more attention it draws from bots.

- Googlebots respect your access rules; hence, they read your website’s robots.txt file before anything else. If there are pages you want to keep away from access, these bots will comply and will not crawl them.

- Googlebots crawl websites with a high page rank more than others. The greater the authority a page has, the more crawler visits it receives. Moreover, the total time these bots give to your website is called the “crawl budget.”

- Googlebots use sitemap.xml to discover further areas that need to be indexed. As these bots don’t crawl all the pages of your website, sitemaps can be advantageous because they can tell Google about the metadata of content types such as images, videos, news, and mobile.
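If you want to check what your robots.txt actually tells a crawler, a small sketch with Python’s standard `urllib.robotparser` module can help. The robots.txt content, the `www.example.com` domain, and the `allowed` helper below are assumptions for illustration, and `site_maps()` needs Python 3.8 or newer. This only applies the rules the way the standard library interprets them; Google’s own parser may differ in edge cases.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for an example site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/

Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())  # parse the text directly, no network access


def allowed(path, agent="Googlebot"):
    """Return True if the rules let the given user agent fetch the path."""
    return parser.can_fetch(agent, "https://www.example.com" + path)


print(allowed("/blog/post-1"))     # a public page
print(allowed("/private/report"))  # a page under the disallowed folder
print(parser.site_maps())          # sitemap URLs declared in robots.txt
```

For a live site, you could call `parser.set_url(...)` followed by `parser.read()` instead of `parse()` to load the real file.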

How Can I Optimize My Joomla Website for Googlebot?

To optimize your Joomla website for Googlebot, use a responsive design that ensures seamless browsing across screen sizes. Use clean HTML markup, proper meta tags, and relevant keywords to improve search engine visibility. Additionally, optimize loading speed, enhance the user experience, and focus on mobile optimization. Explore various beautiful Joomla website designs to create an appealing interface that captivates both users and Googlebot.

Will Connecting Yoast SEO to Google Search Console affect how Googlebot sees my website?

Indirectly, yes. Connecting Yoast SEO to Google Search Console verifies your website with Google, which lets you monitor how the site is being indexed and analyzed and spot crawl problems. Fixing those problems can help improve your website’s visibility and ranking.

Hacks on How to Be an Attractive Website for Googlebots

Googlebots are very helpful, especially in promoting your website until it ranks among the relevant results. However, since they are programmed to do only what they are supposed to, there’s no way you can control them. All you can do is continuously improve your website until it becomes worthy of a higher rank. It’s crucial for as much of your website as possible to be indexed, as this helps you gain more viewers over time. Here are some hacks for a Googlebot-optimized site:

- Don’t focus too much on looking fancy – Fancy JavaScript, DHTML, Ajax content, and frames are fascinating, but not for Googlebots. These bots struggle to crawl websites with such features because the features keep the bots from seeing your website as a text browser would.

- Create fresh content – Googlebots love fresh content. It’s important to publish up-to-date content even if your website is low-ranked, because these bots will then crawl your content more frequently.

- Introduce infinite scrolling carefully – Infinite scrolling can keep visitors reading, but Googlebot does not scroll the way a user does. Make sure the content loaded on scroll is also reachable through ordinary paginated links, so the scrolling doesn’t limit how far these bots can crawl your website.

- Use internal linking more often – Googlebot treats internal links as its map when it begins crawling your website. The more tight-knit and integrated your linking structure is, the more of your website these bots will explore.
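To get a rough feel for the internal links a crawler can follow on a page, you could list the plain `<a href>` links in its HTML. The sketch below uses only Python’s standard library; the `LinkCollector` class, the `internal_links` helper, and the sample HTML are hypothetical, and a real crawler does far more than this.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collect the href value of every <a> tag on a page."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:  # script-only links without an href are skipped
                self.hrefs.append(href)


def internal_links(html, page_url):
    """Return the sorted, absolute URLs that stay on the page's own host."""
    collector = LinkCollector()
    collector.feed(html)
    site = urlparse(page_url).netloc
    resolved = (urljoin(page_url, href) for href in collector.hrefs)
    return sorted({url for url in resolved if urlparse(url).netloc == site})


# Hypothetical page content for illustration.
SAMPLE = """
<a href="/blog/">Blog</a>
<a href="about.html">About</a>
<a href="https://other.example.org/">Partner</a>
<a onclick="load()">Script-only link</a>
"""

print(internal_links(SAMPLE, "https://www.example.com/index.html"))
```

The script-only link never shows up in the result, which is the same reason fancy navigation can hide pages from a crawler that follows ordinary links.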

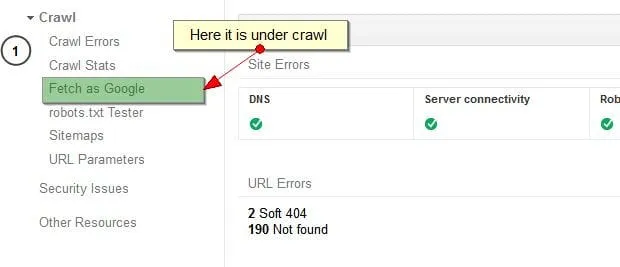

Using Fetch as Google

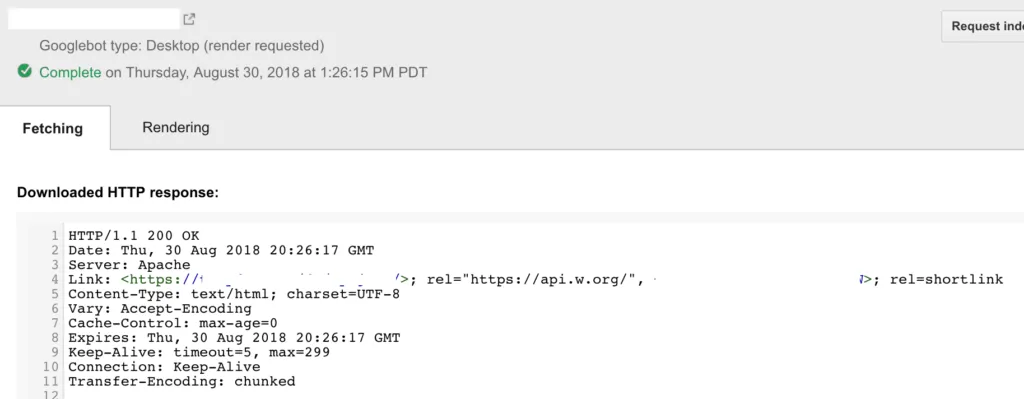

Fetch as Google is a Google tool that lets you determine whether Google can crawl your website. This handy tool works by simulating a crawl similar to the way Googlebot works. Here’s how you can use it:

Step 1:

First, you must run the fetch. Enter the path component of the URL on your site that you want to test. If you leave the text box empty, the tool will fetch your homepage.

Important note: Fetch does not follow redirects. You must follow them manually; a status description will appear with instructions on how to do so.

Step 2:

In this step, you select the type of Googlebot that you want Fetch to use. Your choice determines which Google crawler makes the fetch and how the request is rendered. Here are the options you can choose from:

Desktop

- For web pages, it uses the Googlebot crawler.

- For images, it uses the Googlebot Image crawler.

- For advertising landing pages, it uses the Google AdsBot crawler.

Mobile

- Upcoming: uses the updated version of the Google Smartphone crawler.

- Current: uses the traditional Google Smartphone crawler.

Step 3:

You can now render your request. Once it runs, the tool shows your website’s HTTP response and the platform you are fetching as. This applies to images as well. You can use the result to examine any visual differences between how Google sees your website and how users see it. When the request completes, it is recorded in your fetch history table, where you can see which fetches succeeded and which failed. If you want additional details on a failed fetch, you can request them.

Important note: Your regular quota is 10 fetches.
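Outside the tool, you can get a rough idea of the HTTP response your server gives a crawler by sending a request with a Googlebot-style User-Agent header. The sketch below uses Python’s standard `urllib`; the `build_request` and `fetch_status` helpers are hypothetical names, and the request only imitates the header. It does not come from Google’s servers and does not render the page the way Fetch does.

```python
from urllib.error import HTTPError
from urllib.request import Request, urlopen

# User-Agent string that Googlebot's desktop crawler is known to send.
GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"


def build_request(url):
    """Create a request that identifies itself with the Googlebot User-Agent."""
    return Request(url, headers={"User-Agent": GOOGLEBOT_UA})


def fetch_status(url, timeout=10):
    """Return (HTTP status code, final URL) for the given page."""
    try:
        with urlopen(build_request(url), timeout=timeout) as response:
            # urlopen follows redirects, so geturl() is the final address
            return response.status, response.geturl()
    except HTTPError as err:
        # 4xx and 5xx responses are raised as HTTPError; report the code
        return err.code, url
```

Calling `fetch_status("https://www.example.com/")`, with your own address in place of the placeholder, returns the status code and the final URL. Unlike Fetch, `urlopen` follows redirects automatically, so compare the returned URL with the one you asked for.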

Using Google search simulator